Google Gemini 2.0: Unveiling the Next Frontier in Agentic AI

Google's Gemini 2.0 introduces advanced AI agents with multimodal capabilities, enabling autonomous task execution and seamless real-time interactions across text, images, and audio.

I was torn over what to write about: OpenAI's recent ChatGPT Canvas release or Google's Gemini 2.0. It was a tough decision. Then I thought about it: with Canvas, ChatGPT is playing catch-up, since the feature is somewhat similar to Claude Artifacts. Some features of ChatGPT Canvas are better than those of Claude Artifacts, but I will set that aside for now. Today I want to highlight Gemini 2.0, Google's latest release, and it's simply mind-blowing.

Evolution of Google Gemini 2.0

The earlier Gemini models (1.0, then 1.5 Flash and 1.5 Pro) were stepping stones that introduced multimodality, letting you work with images, audio, and video. Gemini 2.0 is a bit different: its focus is on building agents.

Advancements in Gemini 2.0

I think Gemini 2.0's focus on building agents brings some pretty amazing advancements and features.

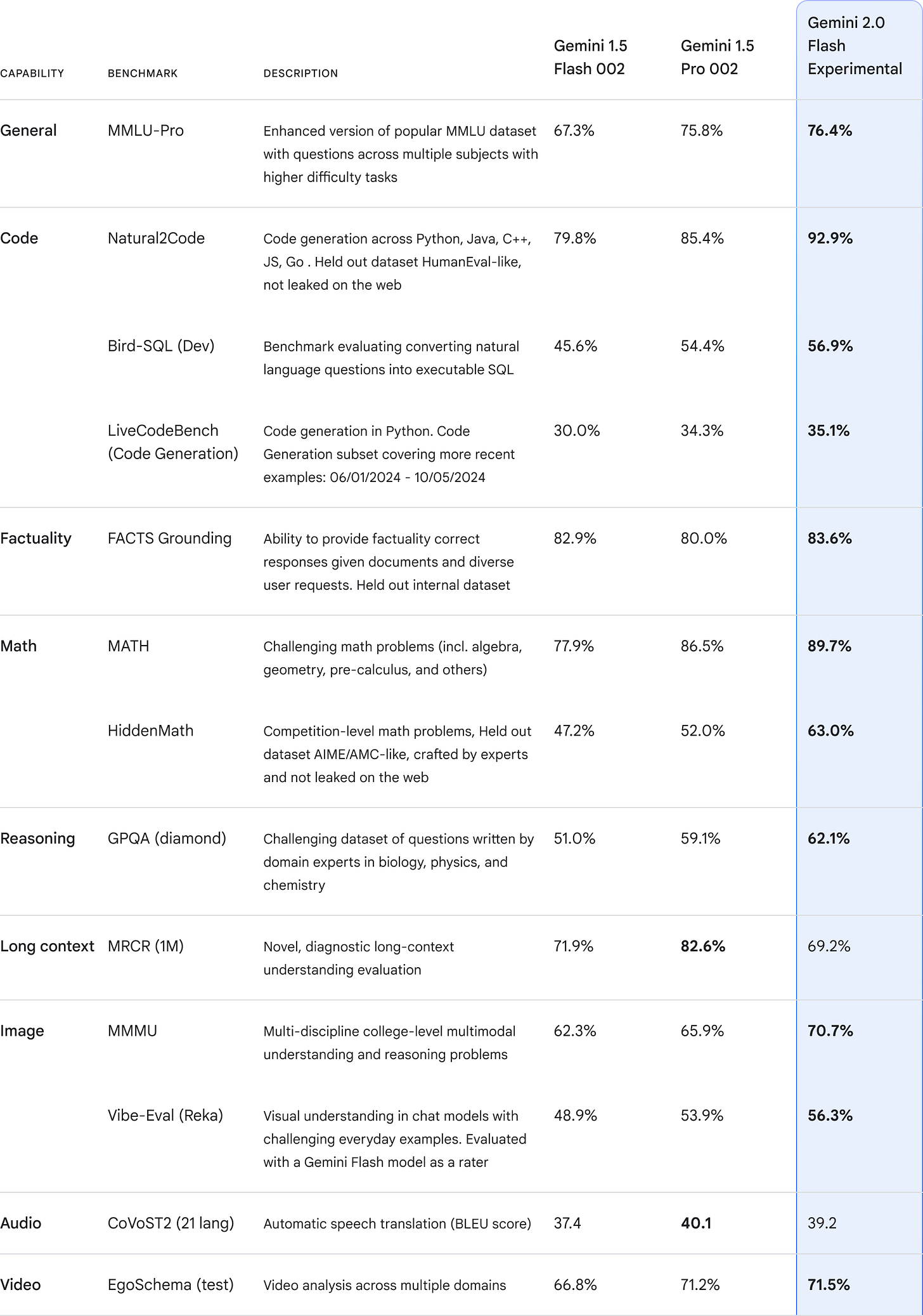

As expected, Gemini 2.0 Flash outperforms 1.5 Flash on multiple benchmarks. On code generation, for example, 1.5 Flash scored 79.8 and 2.0 Flash scored 92.9. The gap is also significant on MMLU-Pro, a general language-understanding benchmark: Gemini 1.5 Flash is at 67.3, and Gemini 2.0 Flash (experimental) is at 76.4.
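To put those numbers in perspective, here is a small sketch that works out the absolute and relative gains from the four scores quoted above. The `gains` helper and the dictionary layout are my own illustrative choices, not anything published by Google.

```python
# Scores quoted in the paragraph above: (Gemini 1.5 Flash, Gemini 2.0 Flash experimental).
scores = {
    "code generation": (79.8, 92.9),
    "MMLU-Pro": (67.3, 76.4),
}


def gains(old: float, new: float) -> tuple[float, float]:
    """Return (absolute gain in points, relative gain in percent), rounded to 1 decimal."""
    absolute = round(new - old, 1)
    relative = round((new - old) / old * 100, 1)
    return absolute, relative


for name, (old, new) in scores.items():
    absolute, relative = gains(old, new)
    print(f"{name}: +{absolute} points ({relative}% relative)")
```

That works out to a gain of 13.1 points on code generation and 9.1 points on MMLU-Pro.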

Multi-Modal Capabilities

In addition to supporting multimodal inputs (like images, video, and audio), 2.0 Flash now supports multimodal output (natively generated images mixed with text, steerable text-to-speech, and multilingual audio). It can also natively call tools such as Google Search and code execution, as well as third-party user-defined functions.
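If "user-defined functions" sounds abstract, the general idea behind tool calling can be sketched in a few lines. This is not the Gemini API. It is a library-free illustration, and every name in it (`TOOLS`, `tool`, `get_weather`, `dispatch`, and the JSON shape of the request) is a hypothetical stand-in. The assumption is that the model emits a structured request naming a function and its arguments, and your application runs it and hands back the result.

```python
import json
from typing import Any, Callable

# Hypothetical registry of user-defined tools; names are illustrative only.
TOOLS: dict[str, Callable[..., Any]] = {}


def tool(fn: Callable[..., Any]) -> Callable[..., Any]:
    """Register a function so it can be looked up by name."""
    TOOLS[fn.__name__] = fn
    return fn


@tool
def get_weather(city: str) -> dict:
    """Stand-in for a real lookup; returns canned data."""
    return {"city": city, "forecast": "sunny"}


def dispatch(call_json: str) -> str:
    """Run the function a model asked for and return the result as JSON."""
    call = json.loads(call_json)
    name, args = call["name"], call.get("args", {})
    if name not in TOOLS:
        return json.dumps({"error": f"unknown tool: {name}"})
    return json.dumps({"name": name, "result": TOOLS[name](**args)})


# Simulated model output: a structured request instead of free text (assumed format).
model_request = '{"name": "get_weather", "args": {"city": "Paris"}}'
print(dispatch(model_request))
```

In a real application the JSON returned by `dispatch` would be sent back to the model so it can compose its final answer. The actual request and response formats depend on the SDK you use.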

Top 3 Projects of Google Gemini 2.0

The three Gemini 2.0 projects that got me excited are Project Astra, Project Mariner, and Jules, an agent for developers. One more cool project that game lovers will enjoy is agents for games, which can help you play as well as play along with you.

Project Astra

Project Astra is a research prototype focused on building a universal AI assistant. It can be used on mobile phones, especially Android phones, and you can have real-time conversations with it. It has memory for storing information and improved tool use, such as interacting with Google Maps and Google Search. Check out the video below to understand its potential capabilities. It's a must-watch!

Project Mariner

Project Mariner is a research prototype focused on building agents that can accomplish complex tasks. It can understand the information on your browser screen, including pixels and web elements like text, code, images, and forms, and then use that information via an experimental Chrome extension to complete tasks for you. It's still in its early stages, but the video below can give you a taste of what's coming next from Google.

Jules: Agents for Developers

Jules is another agent Google is building, this one aimed at developers. It is an experimental AI-powered code agent that integrates directly into a GitHub workflow. I believe Google is playing catch-up here with what Codeium's Windsurf and Cursor can already do with agents for development. Hopefully, considering it is Google building and shipping it, Jules will be able to accomplish some seriously fantastic tasks.

Real-Time Streaming and Interaction

Gemini has also launched several standout real-time streaming features, similar to what ChatGPT has launched:

Interactive Browsing: Engage in live conversations with the AI while interacting with your screen, asking questions as you browse.

Real-Time Conversations: Speak directly to Gemini by switching on your webcam and asking questions, receiving immediate answers and feedback.

Screen Sharing: Share your screen with Gemini and ask detailed questions about the content displayed. Gemini can analyze the screen in real-time, read out information, and provide explanations.

These features collectively make Gemini a powerful tool for seamless real-time interaction, as demonstrated in the example video below.

Starter Apps in AI Studio

This is a must-try. Go to the Starter Apps section in Google AI Studio and you will find three interesting starter apps: Spatial Understanding, Video Analyzer, and Map Explorer.

Spatial Understanding: You can give it a bunch of photographs, and it can annotate them for you.

Video Analyzer: Upload a video or provide a link, and it can summarize, describe the scenes, extract the text, and perform various other tasks.

Map Explorer: Explore places in the world using Gemini and the Google Maps API. It can help you identify the best places.

All three are very good starter projects, and you can try them out right in Google AI Studio.

Final Thoughts

Until now, I have not been a fan of Gemini or any of its products. I have mostly been a user of Claude, ChatGPT, and Perplexity. But this recent release has got me pretty excited, and I'm looking forward to what Google Gemini comes up with in the coming days.

I am very bullish on agent capabilities like Project Astra and Project Mariner, and I'm hopeful that some really interesting projects will come out of them!